Building an eLearning gamelet - QA and Accessibility testing

With the gamelet built, it's time to put it through a rigorous process to make sure it passes our Quality Assurance (QA) checks, so that it is free of bugs and, importantly, meets strict accessibility criteria.

What is accessibility testing anyway?

If you already have a good grasp of accessibility, you can probably skip this part. If you don’t know your interactive elements from your focus orders, though, this could be helpful!

It is best practice to make sure that content doesn’t discriminate against people who have visual or hearing impairments and so need extra tools to access what is shown on the screen or played through a speaker. The Web Content Accessibility Guidelines (WCAG) are a set of guidelines that developers of any digital content can build to. WCAG sets out a series of success criteria that web content, whether it is a website, a mobile app or a learning game, has to meet, grouped into A, AA and AAA conformance levels that show the extent of an application’s accessibility compliance.

For many organisations, WCAG compliance goes beyond best practice and is a legal requirement. In many parts of the UK’s public sector, for example, any digital product needs to be compliant to pass through the procurement process. There is plenty of material out there about WCAG, or you can let the team know if you have any questions; we’re glad to help where we can.

The impact of accessibility on our gamelet

From the original scoping, the goal was for the gamelet to be fully accessible so that no user would be disadvantaged when interacting with it. This raised a series of considerations, since we wanted to use mechanics that play like a game while designing it so that the experience was the same for all learners:

Visual learner: sees the game play out as they get answers right or wrong

Non-visual learner: hears a narrative that describes how the game is playing out

Keyboard/mouse user: no need for fine motor skills or timed actions (such as jumping), since they just need to get the answer right and the gamelet does the rest!

Learner who likes to watch: can see the gamelet play out based on their actions

Learner who likes to listen: can hear the narration alongside seeing the gamelet play out

Learner who likes to listen but struggles to hear every word: can activate closed captions so they can see, hear and read what is happening

Our accessibility testing checked whether learners have the controls they need to enjoy the experience, as well as how the gamelet behaves with a screen reader, with keyboard navigation and in terms of colour. In this case, the checks were:

Activate closed captions

Activate narration

Pause or play, to go at their own pace

Is it a good experience with a screen reader?

Is everything that’s needed read out?

Are decorative items hidden from the screen reader?

Is anything shown visually (rather than explained in text) also read out?

Can pages be navigated easily and appropriately?

Does keyboard focus move through the page in the correct order?

Are questions announced correctly?

Are we using the correct colours?

Is colour contrast good?

Note: our logo and the buttons still fail the colour contrast check, but we have an idea for keeping them as they are while offering an “accessible contrast” option that switches them to higher-contrast colours.
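Colour contrast is the one check on this list that WCAG defines as a number: the ratio (L1 + 0.05) / (L2 + 0.05) of the relative luminances of the lighter and darker colour. The Python sketch below shows how that ratio is worked out for two hex colours. The function names (`hex_to_rgb`, `contrast_ratio`, `meets`) are our own, hypothetical helpers rather than part of any authoring tool, and the thresholds cover text only; buttons and other interface components are judged against a separate 3:1 minimum (success criterion 1.4.11).

```python
def hex_to_rgb(colour: str) -> tuple[int, int, int]:
    """Convert a '#RRGGBB' string to an (r, g, b) tuple of 0-255 ints."""
    colour = colour.lstrip("#")
    r, g, b = (int(colour[i:i + 2], 16) for i in (0, 2, 4))
    return r, g, b


def _linearise(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light, per the WCAG definition.

    WCAG 2.1 prints 0.03928 as the cut-off; no 8-bit value falls between
    that and 0.04045, so the result is the same either way.
    """
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Weighted sum of the linearised channels (0 = black, 1 = white)."""
    r, g, b = (_linearise(ch) for ch in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1 (no contrast) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


# Minimum text contrast under WCAG 2.x (success criteria 1.4.3 and 1.4.6),
# keyed by (conformance level, is the text "large"?).
TEXT_THRESHOLDS = {
    ("AA", False): 4.5,
    ("AA", True): 3.0,
    ("AAA", False): 7.0,
    ("AAA", True): 4.5,
}


def meets(ratio: float, level: str = "AA", large_text: bool = False) -> bool:
    """True if the ratio reaches the text threshold for the given level."""
    return ratio >= TEXT_THRESHOLDS[(level, large_text)]


if __name__ == "__main__":
    white = hex_to_rgb("#FFFFFF")
    for grey in ("#777777", "#767676"):
        ratio = contrast_ratio(hex_to_rgb(grey), white)
        print(f"{grey} on white: {ratio:.2f}:1, AA normal text: {meets(ratio)}")
```

As an example of how tight the thresholds are, mid-grey #777777 text on white comes out at roughly 4.48:1, just under the AA minimum of 4.5:1 for normal text, while the one-step-darker #767676 scrapes over at about 4.54:1.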

Testing the gamelet for accessibility

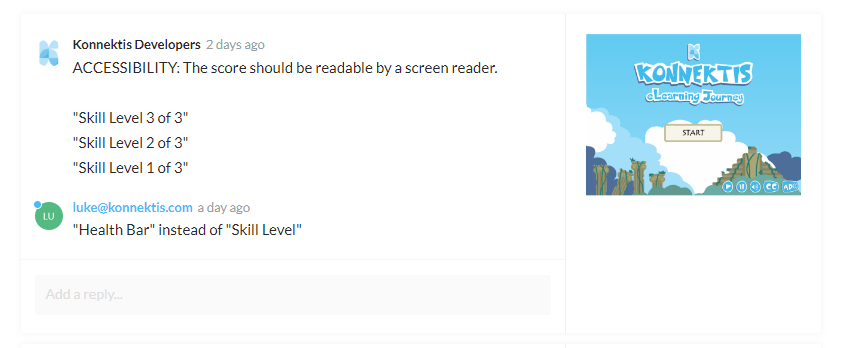

Having built the gamelet in Storyline, we used Articulate’s Review 360 tool to streamline the QA and review process. We find this tool great because comments are recorded live as the tester plays through the gamelet. Don’t worry if you aren’t using Storyline, though, as other authoring tools have similar features. We use Lectora a lot for builds with some large corporate clients and find that its ReviewLink feature works well for collating and actioning feedback from game testers.

Whatever tool we use, it is important that the eLearning developer can see exactly what the tester was seeing, with a screenshot to check against as well.

We may follow up in more detail in a future blog (let us know if you would like more information in the meantime), but the most important areas to check in our QA process are:

Button functionality - Do all the buttons work as intended, or are any parts of the game broken?

Missing functionality - Does the Learner have all the buttons required to complete the game, and are the accessibility controls there, such as Play, Pause and Closed Captions?

Use of language - Is the wording clear and concise, and will the learner understand what they need to do?

Theory versus practice - Some ideas are great at the storyboarding and graphic design stages but don’t quite work when the code gets written. Has the storyboard translated well into a developed build, and does it meet the brief for the concept?

Clear graphics - Are the graphics laid out in an organised way that looks professional but, importantly, makes clear to the Learner what they need to do?

Errors - Mistakes happen in any build, but has the QA process picked up things like typos and grammatical issues?

Playability - Can the tester play it start to finish?

Branching - Does the gamelet branch off correctly for Correct and Incorrect responses, and are there any loops where a Learner gets stuck?

Simplicity - Are there ways of simplifying the gamelet’s language, functionality or graphics so that it is easy to understand and play?

Bugs - Have we found any bugs within Storyline that might mean we need to reconsider how we’ve built part of the gamelet? In this case, we did! The animated character wasn’t caching as expected, so we had to find a better way to present the animation that stopped the character momentarily disappearing from the screen while the user played. We will follow up with a separate blog on this, since other people may find it helpful.
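The branching check above (are there any loops where a Learner gets stuck?) can also be reasoned about on paper. If the branches are written out by hand from the storyboard as a map of which slide leads to which, a slide is a trap when a learner can reach it but can never get from it to the end. The Python sketch below is a swapped-in technique, a standard graph reachability check, not a Storyline feature or the way our testers actually worked; the slide names and the `find_stuck_slides` helper are hypothetical.

```python
from collections import deque


def find_stuck_slides(branches: dict[str, list[str]], start: str, end: str) -> set[str]:
    """Return slides reachable from `start` from which `end` can never be reached."""
    # Forward pass: every slide a learner can reach from the start.
    reachable = {start}
    queue = deque([start])
    while queue:
        slide = queue.popleft()
        for nxt in branches.get(slide, []):
            if nxt not in reachable:
                reachable.add(nxt)
                queue.append(nxt)

    # Reverse every branch so we can walk backwards from the end slide.
    reverse: dict[str, list[str]] = {}
    for slide, targets in branches.items():
        for nxt in targets:
            reverse.setdefault(nxt, []).append(slide)

    # Backward pass: every slide that has some path to the end.
    can_finish = {end}
    queue = deque([end])
    while queue:
        slide = queue.popleft()
        for prev in reverse.get(slide, []):
            if prev not in can_finish:
                can_finish.add(prev)
                queue.append(prev)

    return reachable - can_finish


if __name__ == "__main__":
    # Hypothetical branch map: a retry loop on question 1 is fine because the
    # learner can still answer correctly, but the incorrect path on question 2
    # loops between two slides with no way forward.
    branches = {
        "question_1": ["correct_1", "incorrect_1"],
        "correct_1": ["question_2"],
        "incorrect_1": ["question_1"],
        "question_2": ["correct_2", "incorrect_2"],
        "correct_2": ["results"],
        "incorrect_2": ["incorrect_2_feedback"],
        "incorrect_2_feedback": ["incorrect_2"],
    }
    print(sorted(find_stuck_slides(branches, "question_1", "results")))
```

Running it flags `incorrect_2` and `incorrect_2_feedback`. Checking reachability in both directions is what separates a harmless retry loop from a genuine trap: a loop is only a problem if no slide in it has a route onwards.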

Running the QA process

Our team has a range of expertise, and we run a very flat structure where anyone can voice an opinion… or even disagree. This can occasionally be challenging to manage, since no one’s opinion is automatically final, but we’re building these assets as a team, and we find that working through disagreement along the way is far better than reaching the end of the build and discovering that someone should have raised a concern earlier in the process, or did and wasn’t heard.

While feedback tends to focus on what has been missed, on problems to fix and on areas that need improving, it’s also important to publicly recognise what has gone well when someone has done a fantastic job.

Thank you for reading, and please feel free to share this post if it could help other people.

You can follow us on LinkedIn for regular updates and get in touch by email - team@konnektis.com, phone - +44 (0)330 043 0096, or with the Contact Form below if you would like to speak to one of the team.